Etching AI Controls Into Silicon Could Keep Doomsday at Bay

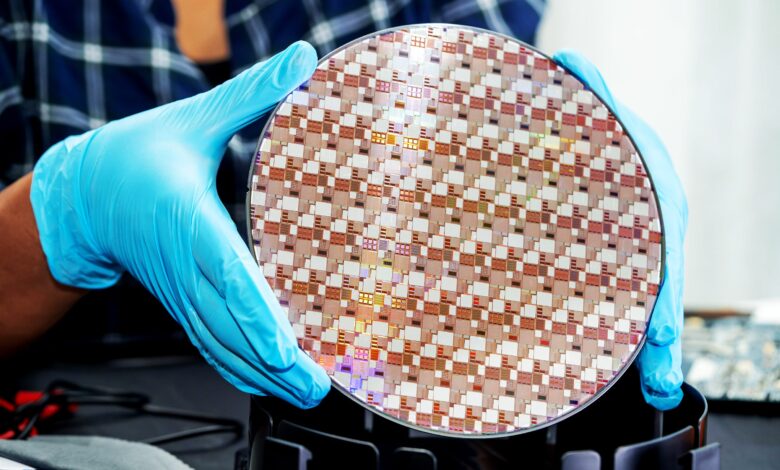

Even the cleverest, most cunning artificial intelligence algorithm will presumably have to obey the laws of silicon. Its capabilities will be constrained by the hardware that it’s running on.

Some researchers are exploring ways to exploit that connection to limit the potential of AI systems to cause harm. The idea is to encode rules governing the training and deployment of advanced algorithms directly into the computer chips needed to run them.

In theory (the sphere where much debate about dangerously powerful AI currently resides), this might provide a powerful new way to prevent rogue nations or irresponsible companies from secretly developing dangerous AI, and one that is harder to evade than conventional laws or treaties. A report published earlier this month by the Center for a New American Security (CNAS), an influential US foreign policy think tank, outlines how carefully hobbled silicon might be harnessed to enforce a range of AI controls.

Some chips already feature trusted components designed to safeguard sensitive data or guard against misuse. The latest iPhones, for instance, keep a person’s biometric information in a “secure enclave.” Google uses a custom chip in its cloud servers to ensure nothing has been tampered with.

The paper suggests harnessing similar features built into GPUs—or etching new ones into future chips—to prevent AI projects from accessing more than a certain amount of computing power without a license. Because hefty computing power is needed to train the most powerful AI algorithms, like those behind ChatGPT, that would limit who can build the most powerful systems.

CNAS says licenses could be issued by a government or international regulator and refreshed periodically, making it possible to cut off access to AI training by refusing a new one. “You could design protocols such that you can only deploy a model if you’ve run a particular evaluation and gotten a score above a certain threshold—let’s say for safety,” says Tim Fist, a fellow at CNAS and one of three authors of the paper.
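The licensing idea can be made concrete with a small sketch. The code below is purely illustrative and is not taken from the CNAS report: the names `issue_license`, `chip_allows`, and `REGULATOR_KEY`, the license fields, and the use of an HMAC with a shared secret are all assumptions made for the example. A real scheme would more plausibly rely on asymmetric signatures and keys held inside tamper-resistant hardware.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret, used here only as a stand-in for a
# regulator's signing key protected by secure hardware.
REGULATOR_KEY = b"demo-key-not-for-real-use"


def issue_license(holder: str, max_flop: float, expires_at: int,
                  key: bytes = REGULATOR_KEY) -> dict:
    """Regulator side: sign the license terms so they cannot be altered."""
    payload = json.dumps(
        {"holder": holder, "max_flop": max_flop, "expires_at": expires_at},
        sort_keys=True,
    )
    tag = hmac.new(key, payload.encode(), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def chip_allows(license_: dict, requested_flop: float, now: int,
                key: bytes = REGULATOR_KEY) -> bool:
    """Chip side: allow a job only under a valid, unexpired license."""
    expected = hmac.new(key, license_["payload"].encode(),
                        hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, license_["tag"]):
        return False  # terms were altered or signed with a different key
    terms = json.loads(license_["payload"])
    if now >= terms["expires_at"]:
        return False  # license lapsed and was not refreshed
    return requested_flop <= terms["max_flop"]


if __name__ == "__main__":
    lic = issue_license("example-lab", max_flop=1e24, expires_at=2_000)
    print(chip_allows(lic, 5e23, now=1_000))  # True: valid and within the cap
    print(chip_allows(lic, 5e23, now=3_000))  # False: license has expired
```

Because the license carries an expiry time, a regulator in this sketch cuts off access simply by declining to issue a fresh one, which mirrors the periodic refresh described above.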

Some AI luminaries worry that AI is now becoming so smart that it could one day prove unruly and dangerous. More immediately, some experts and governments fret that even existing AI models could make it easier to develop chemical or biological weapons or automate cybercrime. Washington has already imposed a series of AI chip export controls to limit China’s access to the most advanced AI, fearing it could be used for military purposes, although smuggling and clever engineering have provided some ways around them. Nvidia declined to comment, but the company has lost billions of dollars’ worth of orders from China due to the most recent US export controls.

Fist says that although hard-coding restrictions into computer hardware might seem extreme, there’s precedent in establishing infrastructure to monitor or control important technology and enforce international treaties. “If you think about security and nonproliferation in nuclear, verification technologies were absolutely key to guaranteeing treaties,” he says. “The network of seismometers that we now have to detect underground nuclear tests underpin treaties that say we shall not test underground weapons above a certain kiloton threshold.”

The ideas put forward by CNAS aren’t entirely theoretical. Nvidia’s all-important AI training chips—crucial for building the most powerful AI models—already come with secure cryptographic modules. And in November 2023, researchers at the Future of Life Institute, a nonprofit dedicated to protecting humanity from existential threats, and Mithril Security, a security startup, created a demo that shows how the security module of an Intel CPU could be used for a cryptographic scheme that can restrict unauthorized use of an AI model.

That proof of concept is impractical for real AI training, but it shows how a company might provide a model to a customer with the guarantee that the model’s weights—the key features that define how it behaves—cannot be accessed. It also prevents a certain threshold of computing from being exceeded and provides a remote “kill switch” that can be sent to the chip to disable use of the model.
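The two controls described here, a compute threshold and a remote kill switch, can be illustrated with a toy wrapper. This is not the code from the Future of Life Institute and Mithril Security demo; the class `GovernedModel`, its methods, and the unitless "cost" counter are hypothetical, and a plain Python object offers none of the tamper resistance that a hardware security module is meant to provide.

```python
class GovernedModel:
    """Toy wrapper that meters compute and honors a kill switch."""

    def __init__(self, compute_budget: int):
        self.compute_budget = compute_budget  # hypothetical cap, arbitrary units
        self.compute_used = 0
        self.disabled = False

    def kill(self) -> None:
        """Simulate the remote kill-switch message reaching the chip."""
        self.disabled = True

    def run(self, cost: int) -> str:
        """Run one request if the model is enabled and within budget."""
        if self.disabled:
            raise PermissionError("model disabled by kill switch")
        if self.compute_used + cost > self.compute_budget:
            raise PermissionError("compute threshold would be exceeded")
        self.compute_used += cost
        return "ok"


if __name__ == "__main__":
    model = GovernedModel(compute_budget=10)
    print(model.run(cost=4))  # ok
    model.kill()
    try:
        model.run(cost=1)
    except PermissionError as err:
        print(err)  # model disabled by kill switch
```

In the sketch, both checks happen before any work is counted, so a refused request never consumes budget. In practice, the hard part is making sure the checks cannot be bypassed by whoever controls the machine.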

Developing and deploying real “seismometers” or hardware controls for AI will likely prove more difficult, for technical and political reasons. New cryptographic software schemes would need to be developed, perhaps along with new hardware features in future AI chips. These would need to be acceptable to the tech industry while also being expensive and impractical for a foreign adversary with advanced chipmaking gear to bypass. Previous efforts to hobble computers in the name of national security—like the Clipper chip that gave the NSA a backdoor into encrypted messages in the 1990s—have not been big successes.

“For good reasons, many people in tech have a history of opposing similar types of hardware interventions when manufacturers try to implement them,” says Aviya Skowron, head of policy and ethics at EleutherAI, an open source AI project. “The technological advancements required to make the governable chips proposal robust enough are not trivial.”

The US government has expressed interest in the idea. Last October the Bureau of Industry and Security, the division of the Commerce Department that oversees export controls like those imposed on AI chips, issued a request for comment soliciting technical solutions that would allow chips to restrict AI capabilities. Jason Matheny, the CEO of Rand, a nonprofit that advises and works with the US government, told Congress last April that a national security priority should be researching “microelectronic controls embedded in AI chips to prevent the development of large AI models without security safeguards.”