McAfee Rolls Out Deepfake Detector in Lenovo’s New Copilot-Plus PCs

The new tool will help consumers spot fake videos by analyzing their audio behind the scenes.

Deepfakes have come a long way in just the past year, making it increasingly difficult for the average person to figure out what’s real and what’s not.

Thanks to the power of artificial intelligence, cybercriminals and others can now produce surprisingly convincing audio, video and still-photo deepfakes faster and easier than ever before. And experts say those creations could be used to do everything from scam consumers out of their money to swing public opinion ahead of an election.

While much of that problem stems from the rise of AI, security software company McAfee says the solution could lie in AI, too.

The McAfee Deepfake Detector, announced Wednesday, will alert consumers to possible deepfakes they might encounter while browsing the internet or viewing posts in their social media feeds.

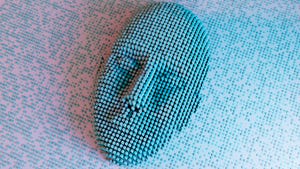

McAfee’s Deepfake Detector points out possible signs of a fake video.

McAfee/Screenshot by CNET

The tool will be rolled out on Lenovo’s Copilot-Plus PCs starting today. Like the antivirus software McAfee is known for, it will be a paid service. Consumers who opt in will get a free 30-day trial. After that, plans start at $9.99 for the first year.

While the detector will be powered by AI, all processing will be done on the device itself, both to protect privacy and to avoid the latency that can occur when data is sent to the cloud, says Steve Grobman, McAfee’s executive vice president and chief technology officer.

Consumer data will not be collected and updates to the tool’s AI will come from the company’s research team, he says.

“We really wanted to arm our customers with a set of tools to help them identify whether something is potentially AI generated,” Grobman says. “But we also wanted to be very mindful of things like privacy and user experience.”

Also, like antivirus, the detector is designed to run quietly in the background. But if it detects something questionable while the user is browsing websites or watching videos on their social media feeds, there will be a pop-up notification. Users can then choose whether to ignore it or click on it for more information.

Right now, the tool only analyzes a video’s audio, so the detector won’t kick in if someone is just scrolling through their Instagram or Twitter feeds without the sound on. And it can’t tell if photos are deepfakes.

The tool’s capabilities will eventually be expanded to cover silent video and still images, Grobman says. But McAfee decided to start with audio because, while many deepfakes use real video footage, they almost always contain faked audio.

And while the detector is only available on the new Lenovo computers for now, Grobman says it’s compatible with certain kinds of both Intel and Qualcomm processors, which could allow for future availability on other kinds of PCs and mobile devices.

The idea is to educate people about what they’re seeing. As part of that effort, McAfee is also launching its Smart AI Hub, a website where consumers can go to learn all about AI and deepfake technology.

You might think getting consumers to pay about $10 a year for something like this would be a tough sell, but Grobman argues that it’s not much different from paying for software to keep your computer free of viruses.

He notes that deepfakes are being increasingly used in online scams, pointing to a now-famous deepfake video that made it look like megastar Taylor Swift was endorsing a $10 deal on Le Creuset skillets.

In that kind of scam, there’s no malware for security software to detect, and nothing to stop people who fall for it from sending off their money, cryptocurrency or personal information.

“But if we can help warn a user that, ‘Hey, this is likely AI generated,’ a light bulb might go on for some of them,” he says. “Maybe they’ll save their 10 bucks.”